(If you want to read about how I got here, check this out. If you just want a guide to do this, the backstory doesn't matter, so just keep reading this page.)

- Install Wheezy. However you normally do; all the defaults are fine. If you feel like it, you can halve the size of swap and add that back in to the system partition. Or you can do this later with gparted, or you can leave it and have twice as much disk devoted to swap as the Debian installer thinks you'll need.

- Upgrade to Jessie. Also in the normal way.

- Boot into an alternate Jessie environment. Or at least something with recent btrfs-tools. Ubuntu may work, but I made a custom Jessie iso on the Debian live-systems build interface. This tool is really cool, someone's dedicating a lot of server time to make this thing happen and I think it's awesome. On the downside, you'll probably have to wait a few days before you make it to the top of the queue, and once your "build finished" email is sent out, you'll have to download the iso in the 24 hours before they delete it.

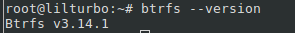

- Install btrfs-tools in the live boot. Once booted into the new environment, we'll need btrfs-tools, of course. Run btrfs --version to make sure you've done stuff right. If you're using the ancient Wheezy 0.19 version, this stuff may not work right. The correct version should sound like a kernel version number; mine is currently π.
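If you'd rather script the version check than eyeball it, here is a rough sketch. The helper name `btrfs_version_ok` is made up for illustration, and it assumes the version string contains something like `v3.14`; if your build prints a different format, adjust the pattern.

```shell
# Hypothetical helper (not part of btrfs-tools): succeeds if the reported
# version is newer than the old Wheezy 0.19 release.
# Assumption: the string looks like "Btrfs v3.14.1" or "btrfs-progs v4.20.1".
btrfs_version_ok() {
    # Keep only the dotted number that follows the "v"
    ver=$(printf '%s\n' "$1" | sed -n 's/.*v\([0-9][0-9.]*\).*/\1/p')
    [ -n "$ver" ] || return 2
    # sort -V orders version strings; 0.19 must sort first and must differ
    oldest=$(printf '%s\n%s\n' "0.19" "$ver" | sort -V | head -n 1)
    [ "$oldest" = "0.19" ] && [ "$ver" != "0.19" ]
}
```

On a real system you would call it as `btrfs_version_ok "$(btrfs --version)" && echo "recent enough"`.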

- Convert the just-installed ext root to btrfs. I got most of my instructions on this step from the occasionally wonderful btrfs wiki. It doesn't matter if your root is ext3 or ext4 - in fact, these steps may even work with ext2, how should I know. The steps go something like this:

    root@serv$ # run fdisk -l as root to make sure you're using the hard disk's root filesystem partition for these next steps
    root@serv$ fsck -f /dev/sdX1
    root@serv$ btrfs-convert /dev/sdX1

Use the following optional but prudent steps to make sure your data survived:

    root@serv$ mkdir /btrfs && mount -t btrfs /dev/sdX1 /btrfs
    root@serv$ btrfs subvol list /btrfs
    root@serv$ # find the name of the saved subvolume, something like extX_saved
    root@serv$ mkdir /ext_saved && mount -t btrfs -o subvol=extX_saved /dev/sdX1 /ext_saved
    root@serv$ mkdir /orig && mount -o loop,ro /ext_saved/image /orig

Yep, that's a triple mount. The contents of the last mount should be the same as the contents of your root filesystem. Check anything important or customized, and, if you're satisfied and want to set everything in stone:

- Modify fstab. Make sure you change fstab or your system isn't going to boot, fool. Use blkid to get the UUID of the root partition and make sure this matches the entry for your / in fstab (I don't think the UUID will change but I can't remember). Then make sure the line looks something like this:

- Pop out the alternate boot media and reboot. Sometimes, when emerging from deeply nested sessions, chroots, or alternate boot environments, don't you feel like Cobb waking at the end of Inception? Anyway, you should be booted into the newly buttery root of your recently installed system now.

- Verify integrity and clean up. I know that shit's boring, yo, but we're gonna do it anyway.

- Add the secondary drive and partition. To get the second drive partitioned properly, I simply popped in the second drive and dd'ed the existing disk to the second one.

- Convert to raid1 live! "Fuck it, we're doing it live." Yeah, computers are pretty cool I guess. From here.

- And we're done! Isn't it great? Hypothetically, one of our drives can fail and we'll still be able to boot! I think we might be screwed if the boot partition gives us trouble, but I'm not really sure yet.

    root@serv$ vi /etc/apt/sources.list
    :%s/wheezy/jessie/g
    :%s/stable/testing/g
    :%s/^deb-src/#deb-src/g
    :wq
    root@serv$ apt-get update
    root@serv$ apt-get dist-upgrade -y
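If you don't feel like opening vi, the same three substitutions can be done non-interactively. This is only a sketch: `retarget_sources` is a name I made up, it assumes GNU sed (for `-i.bak`), and it keeps a `.bak` copy next to the file in case the edit goes sideways.

```shell
# Hypothetical helper: apply the wheezy -> jessie edits to a sources.list-style
# file in place, leaving the original behind as FILE.bak.
retarget_sources() {
    # $1: path to the file to rewrite
    sed -i.bak \
        -e 's/wheezy/jessie/g' \
        -e 's/stable/testing/g' \
        -e 's/^deb-src/#deb-src/' \
        "$1"
}
```

Usage would be something like `retarget_sources /etc/apt/sources.list`, followed by the apt-get update and dist-upgrade as usual. Try it on a copy first if you're nervous.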

:)

    root@serv$ umount /orig
    root@serv$ rm /ext_saved/image
    root@serv$ umount /ext_saved
    root@serv$ btrfs subvol delete /btrfs/extX_saved
    root@serv$ umount /btrfs
    root@serv$ rmdir /orig /ext_saved /btrfs

    UUID=deadbeef-beef-dead-beef-deadbeefbeef / btrfs noatime,ssd,discard,space_cache 0 0

Yes, it is correct that btrfs roots get a 0 for passno, the last number - this means don't worry about running fsck, since fsck.btrfs is just a feel-good utility anyway. They only released it to fit in, the whole story's in the manpage, which is a pretty good read, btw.

    root@serv$ man fsck.btrfs

Anyway, back to stuff that matters - don't just blindly use those mount options in my fstab line - if you use ssd on a drive that isn't an ssd, you'll probably have a bad time. I didn't turn on certain options like autodefrag and compress=lzo because this is intended to be a VM server, and also probably because I don't know what I'm doing. Check this out, the corresponding page on the ever-helpful wiki. The whole thing is worth a read, make some damn decisions of your own!
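Before rebooting, a couple of cheap sanity checks can save you a trip back into the live environment. The sketch below is mine, not from any tool: `check_root_entry` and `suggest_root_options` are made-up names, and the option lists are just the ones from my fstab line with or without the SSD bits, not a recommendation. The sysfs `rotational` flag it reads holds 1 for spinning disks and 0 otherwise.

```shell
# Hypothetical helper: succeed only if FILE (fstab format) has a "/" entry
# whose filesystem type is btrfs and whose passno (6th field) is 0.
check_root_entry() {
    awk '
        $1 !~ /^#/ && $2 == "/" {
            found = 1
            if ($3 != "btrfs") { print "root is not btrfs: " $3; bad = 1 }
            if ($6 != "0")     { print "passno is " $6 ", expected 0"; bad = 1 }
        }
        END {
            if (!found) { print "no / entry found"; exit 1 }
            exit (bad ? 1 : 0)
        }
    ' "$1"
}

# Hypothetical helper: print mount options depending on a sysfs rotational
# flag file, e.g. /sys/block/sda/queue/rotational.
suggest_root_options() {
    if [ "$(cat "$1")" = "1" ]; then
        echo "noatime,space_cache"              # spinning disk: leave out ssd,discard
    else
        echo "noatime,ssd,discard,space_cache"  # non-rotational device
    fi
}
```

In practice that would be `check_root_entry /etc/fstab` (against the fstab on the converted root, wherever you have it mounted) and `suggest_root_options /sys/block/sdX/queue/rotational`.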

    root@serv$ btrfs subvol delete /extX_saved # skip this if you already deleted it from the live environment
    root@serv$ # allegedly you can verify with btrfs subvol list -d /, but the manpage for the btrfs-tools version pi on Jessie didn't have this documented
    root@serv$ btrfs fi defrag -r /
    root@serv$ btrfs balance start /

    root@serv$ dd if=/dev/sdSETUPDRIVE of=/dev/sdNEWDRIVE bs=32M # don't fuck this up, mmk?

This will clone our boot, system and swap partitions to the new drive. For general applications, I recommend halving each swap. Even though I never had you modify the swap part of fstab, Linux is smart and will find and use all swap partitions attached to the computer.
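Since getting if= and of= backwards is the classic way to ruin your day, here's a small sketch of a wrapper around the dd clone that refuses identical arguments and compares the copy afterwards. `clone_and_verify` is a hypothetical name, and the `cmp` step assumes source and destination are the same size, so it fits image files or identical drives, not a bigger second disk.

```shell
# Hypothetical helper: clone SRC to DST with dd, then check the copy.
# Assumption: SRC and DST are the same size, otherwise cmp reports a mismatch.
clone_and_verify() {
    # $1: source, $2: destination (image files here; block devices in real use)
    if [ "$1" = "$2" ]; then
        echo "source and destination are the same, refusing" >&2
        return 1
    fi
    dd if="$1" of="$2" bs=1M 2>/dev/null || return 1
    # cmp -s is silent and exits non-zero on the first differing byte
    cmp -s "$1" "$2"
}
```

You can rehearse it safely on throwaway image files before pointing it at real devices.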

    root@serv$ btrfs fi show # to see which device is mounted as root
    root@serv$ fdisk -l # to see which device will be added to form our raid1 (aka, which one is NOT root)
    root@serv$ btrfs device add /dev/sdNOTBOOT1 /
    root@serv$ btrfs balance start -dconvert=raid1 -mconvert=raid1 -sconvert=raid1 -f /

The last command complains if you try to convert system blocks to raid1 as well (-sconvert=raid1), which is why we use the -f flag, which has the ominous manpage description "force reducing of metadata integrity". But there is no information I could find out there regarding this, and I want to support complete failover, so this is what I'm using and it's working ok for now.
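To confirm the balance actually converted everything, you can look at the profiles that `btrfs fi df /` reports. Below is a sketch of a filter for that output. `all_raid1` is a made-up name, and it assumes lines shaped like `Data, RAID1: total=..., used=...`, which is how I remember the output looking; check it against what your version prints. It skips any GlobalReserve line, which newer tools print and which is always single.

```shell
# Hypothetical helper: read "btrfs fi df"-style lines on stdin and succeed
# only if every block group type reports the RAID1 profile.
# Assumption: each line looks like "Data, RAID1: total=1.00GiB, used=512.00MiB".
all_raid1() {
    awk -F'[,:]' '
        /^GlobalReserve/ { next }            # always "single", not a real profile
        NF >= 2 {
            seen = 1
            profile = $2
            gsub(/^ +| +$/, "", profile)     # trim surrounding spaces
            if (profile != "RAID1") { print "not raid1: " $0; bad = 1 }
        }
        END { exit (seen && !bad) ? 0 : 1 }
    '
}
```

On the live system that would be `btrfs fi df / | all_raid1 && echo "all raid1"`.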

As always, the Arch wiki docs are unparalleled; peruse related info here. I hope it all worked. Drop a line below if something didn't, or if something did!